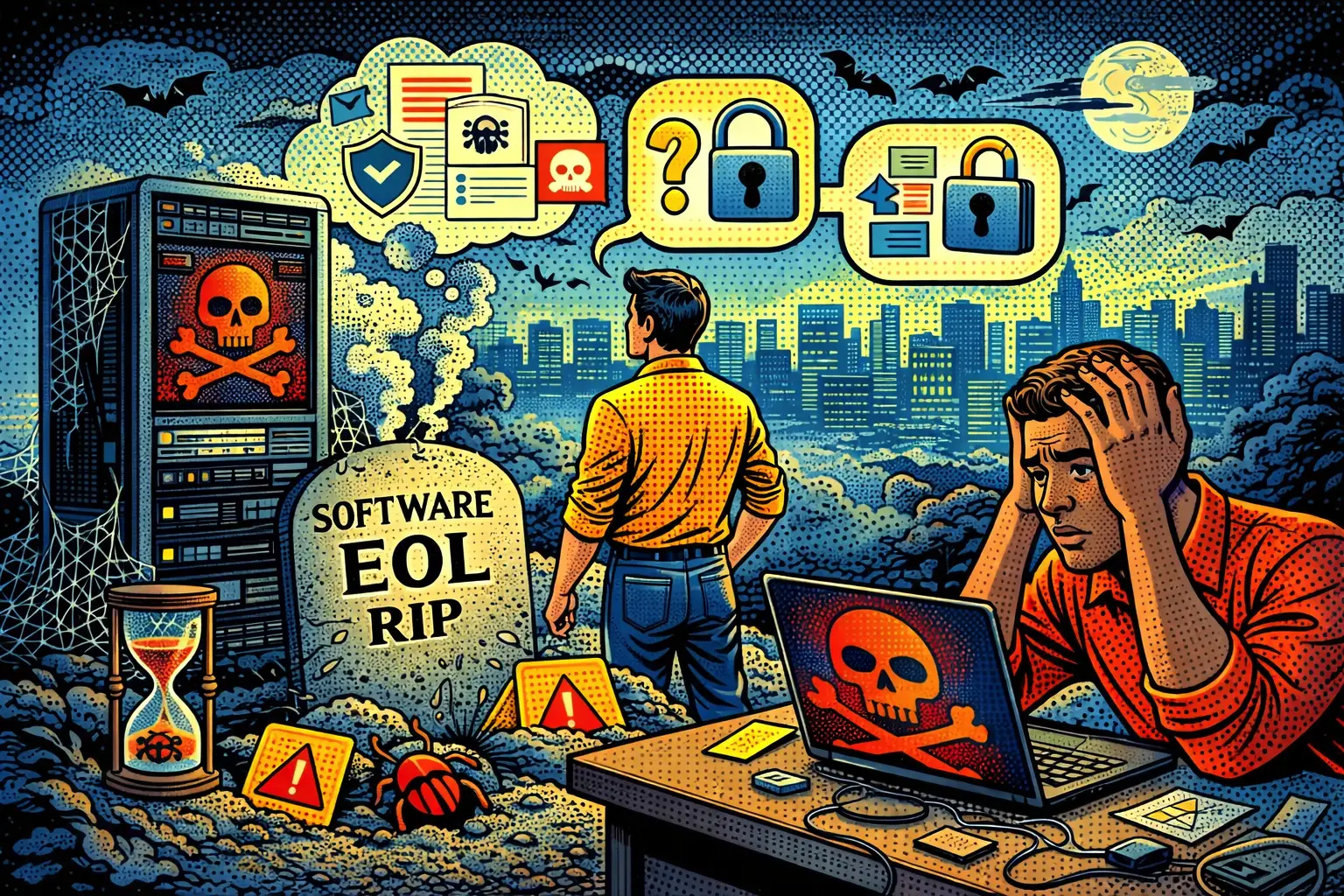

I'm supposed to "shift left" and review designs before code gets written. But nobody loops me in until features are already built, timelines are locked, and asking for changes means I'm a "bottleneck". My team reviews maybe 10% of what ships. The other 90%? I just leave it to faith.

The Stressed Out Security Champion
